This Week in AI: Momentum, Maturity, and Mounting Concerns

Global AI developments

Governments are moving from “talking about AI” to actually locking rules into place. South Korea just activated what it’s calling the world’s first comprehensive AI law, the AI Basic Act. It targets high-risk uses like healthcare, transport, nuclear safety, and finance, requires human oversight, and introduces fines for unlabeled AI-generated content—though there’s at least a one-year grace period while companies adapt (Reuters). In parallel, new state-level AI laws in the U.S. (notably in California and Texas) just came into force on January 1, 2026, while a recent federal executive order lays out a “minimally burdensome” national AI policy and explicitly looks for ways to constrain state rules seen as overreaching (King & Spalding). The result: the regulatory map is getting denser—and more contested.

At the global level, 2026 is shaping up as the year AI governance goes fully international. A UN-backed Global Dialogue on AI Governance and an independent scientific panel are giving almost every country a seat at the table to debate risks, norms, and coordination mechanisms (Atlantic Council). India is pushing hard to brand itself as a first-tier AI nation focused on deployment at scale rather than chasing headline “big models,” while the state of Telangana launched Aikam, a dedicated AI innovation entity, at Davos to attract global experimentation and investment (The Times of India). Layer onto that the EU AI Act moving into full force and strong warnings from ethicists that time is running short to get guardrails right, and you get a clear signal: AI is now treated as critical infrastructure, not a toy (Darden Report Online).

Business + innovation updates

On the consumer and device side, Apple is reportedly giving Siri a full rebuild into a chatbot-style assistant, powered under the hood by a customized version of Google’s Gemini 3 models. The project, codenamed “Campos,” is expected to land in upcoming OS releases and integrate deeply across iPhone, iPad, and Mac, with both voice and text interactions (Reuters). For small businesses and creators, that likely means more “native” AI inside the devices they already use every day, not just separate apps. Over in hardware and industry, NVIDIA continues to push on specialized AI: it’s launching new Clara models to accelerate drug discovery and healthcare (NVIDIA Blog), while Google DeepMind and Boston Dynamics are testing Gemini-powered Atlas robots that can interpret language and perform non-scripted industrial tasks (Champaign Magazine). The through-line: AI is moving from screens into physical workflows.

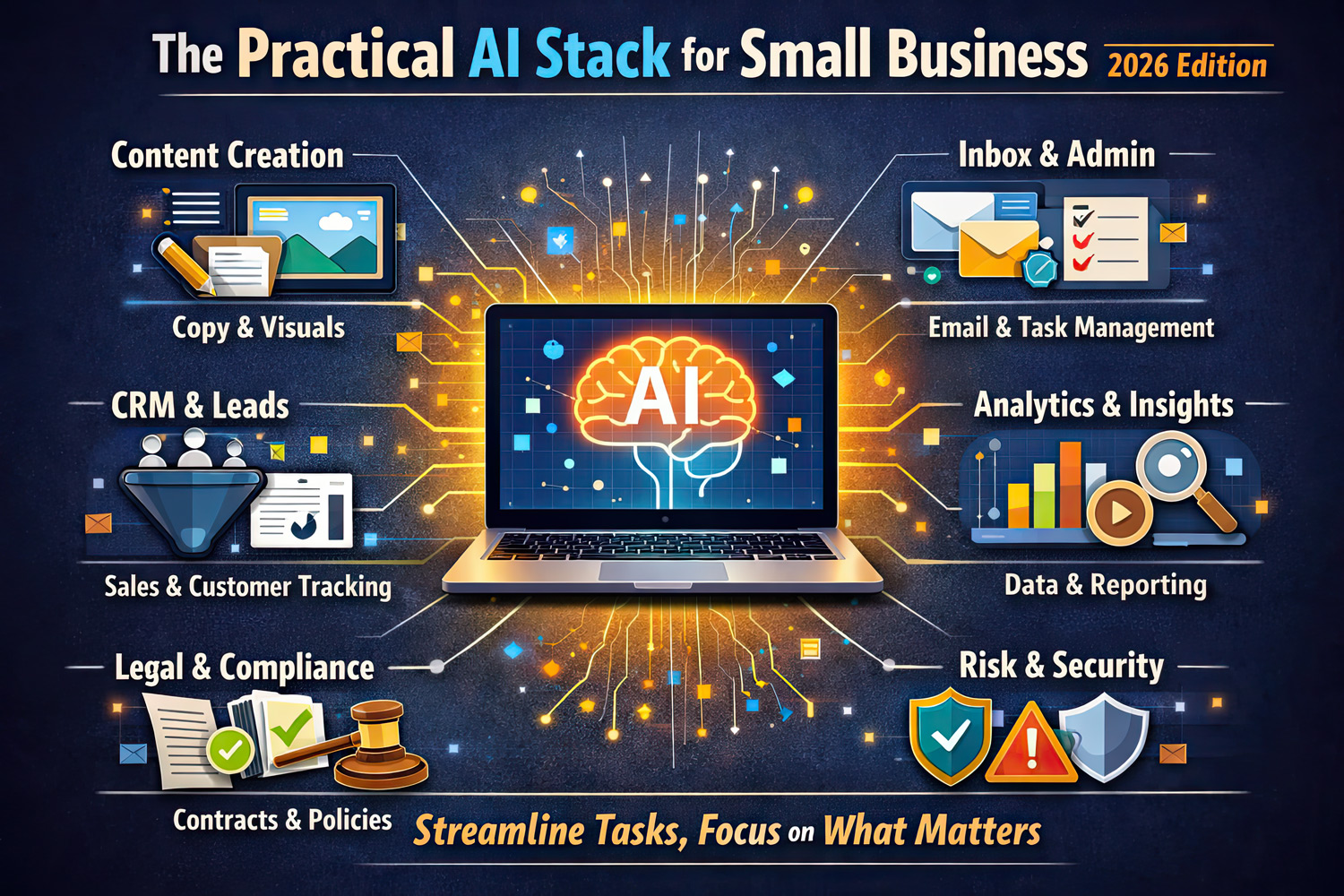

For small businesses and solopreneurs, the market is exploding with “practical stack” tools rather than just showy demos. Recent 2026 roundups now treat AI as the operating system of modern business—covering everything from marketing automation and customer support to risk management and fraud detection (Medium). You’re seeing the same pattern across multiple independent guides: a core mix of AI for content (copy + visuals), inbox and admin triage, CRM/lead handling, analytics, and basic legal/compliance checks. The opportunity for small operators isn’t to chase every shiny new app—it’s to deliberately assemble a lean toolkit that removes busywork and frees up more time for high-value thinking and creative work.

The top AI fear/controversy of the week

This week’s biggest cluster of concerns revolves around harm and misuse, especially where AI intersects with safety, deepfakes, and guardrails. In the U.S., legal analysts are warning that new state laws plus the federal executive order will kick off years of battles over what AI systems are allowed to say, track, or block, and who’s responsible when things go wrong (King & Spalding). A January legal report highlights lawsuits ranging from copyright fights over AI-generated works to a wrongful-death case that alleges an AI assistant amplified paranoid delusions and contributed to a murder-suicide, raising tough questions about product design, user monitoring, and duty of care (JD Supra). In parallel, global backlash against deepfake misuse, including non-consensual sexualized imagery, has already forced restrictions on image generation in some high-profile models after months of abuse (etcjournal.com).

The broader ethical conversation is sharpening: serious voices in academia and industry are calling ethics the defining issue for AI’s future and warning that time is short to address job displacement, autonomy, bias, and misinformation at scale (Darden Report Online). The encouraging piece is that these worries are directly driving concrete action: more robust safety requirements in law, mandatory guardrails for conversational systems, and a push for transparent labeling and human oversight in high-impact domains. None of this eliminates risk—but it does mean the “wild west” phase is ending, and thoughtful operators who build with safety and clarity in mind will be on the right side of where this is heading.